Bioinformatics on Flyte: Read Alignment

Flyte presents an excellent solution for the complexities inherent in bioinformatics workflows. Tailored to abstract the intricacies of compute resources, Flyte allows bioinformaticians to focus on defining and executing reproducible workflows without grappling with underlying infrastructure details. Offering scalability and efficient distributed computing, Flyte proves advantageous for processing large datasets in parallel. Its cloud-native design facilitates seamless integration with cloud services, while its extensibility and customization support the incorporation of diverse tools and algorithms. This post is based largely on the evolving variant discovery workflow, specifically focusing on quality control, preprocessing, and alignment. We’ll explore a number of Flyte features which make it uniquely suited to these tasks.

Custom dependencies

While Flyte tasks are predominantly defined in Python, a number of abstractions allow us to use any tool we like. Many common bioinformatics tools are written in languages like Java, C++, Perl, and R. Because Flyte is a container- and Kubernetes-native orchestrator, we can easily define a Dockerfile that incorporates whatever dependencies we need. You can build out the default Dockerfile that ships with `pyflyte init` to add custom dependencies. The project’s Dockerfile incorporates a number of common bioinformatics tools to create a base image for use in tasks. Here’s samtools for example, a utility for interacting with alignment files:

Any additional Python dependencies can also be specified in the `requirements.txt` file, which gets pulled into and installed in the final image. You'll also be able to use fast registration as you iterate on your code to avoid having to rebuild and push an image every time.

Flyte uses an object store, like S3, for storing all of its workflow files and any larger inputs and outputs. While we’re used to working with a traditional filesystem in bioinformatics, pointing tools to files and directories explicitly, Flyte makes this much more flexible. Since each task runs in its own container, those files and directories can be pulled in and placed wherever they are needed at the task boundary, greatly simplifying path handling. Additionally, we can create dataclasses to define our samples at the workflow level, providing a clean and extensible data structure that keeps things tidy. Here’s the dataclass for capturing the outputs of fastp:
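A minimal sketch of what such a dataclass could look like follows. The class name, field names, and bucket paths are assumptions made for illustration, not the project's actual definition. Locations are kept as plain strings so the example runs with the standard library alone; in a Flyte project these fields would more likely use Flyte's own file types.

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class FastpOutput:
    """Hypothetical container for one sample's fastp results."""

    sample: str                            # sample identifier
    report_json: str                       # location of fastp's JSON report
    read1_filtered: str                    # location of the filtered forward reads
    read2_filtered: Optional[str] = None   # filtered reverse reads, if paired-end

    def is_paired(self) -> bool:
        """Return True when a second (reverse) read file is present."""
        return self.read2_filtered is not None


# The bucket and object names below are made up for illustration.
out = FastpOutput(
    sample="sampleA",
    report_json="s3://my-bucket/fastp/sampleA.json",
    read1_filtered="s3://my-bucket/fastp/sampleA_1.filt.fq.gz",
    read2_filtered="s3://my-bucket/fastp/sampleA_2.filt.fq.gz",
)

# asdict() gives a plain dictionary, handy for logging or serialization.
record = asdict(out)
```

Keeping the sample name next to the file locations means downstream tasks can take a single typed object rather than several loosely related paths.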

Instead of writing to directories and keeping track of things manually on the command line, these dataclasses keep relevant metadata about your samples and let you know where to find them in object storage.

Pre-processing & QC

Not only can we incorporate any tools we need into our workflows, but there are also a number of ways to interact with them. FastQC is a very common tool for gathering QC metrics about raw reads. It's a Java tool and is included in our Dockerfile. It doesn't have any Python bindings, but luckily Flyte lets us run arbitrary ShellTasks with a clean way of passing in inputs and receiving outputs. Just define a script for what you need to do and ShellTask will handle the rest.

An additional benefit of defining the FastQC output explicitly is that the reports are then saved in the object store, allowing a later task to quantify them longitudinally to see sequencing performance over time.

We can also use the FastQC outputs immediately in our workflow, in concert with conditionals, to control execution based on input data quality. FastQC generates a summary file with a simple PASS / WARN / FAIL call across a number of different metrics; our workflow can check for any FAIL lines in the summary and automatically halt execution.

This can surface an early failure without wasting valuable compute or anyone's time doing manual review.
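The check itself is simple enough to sketch in plain Python. This assumes the contents of FastQC's `summary.txt` have already been read into a string, with one tab-separated line per module (status, module name, file name). The function name `failed_modules` is hypothetical, and how the project wires the result into a Flyte conditional is not shown here.

```python
from typing import List


def failed_modules(summary_text: str) -> List[str]:
    """Return the names of FastQC modules whose status is FAIL."""
    failed = []
    for line in summary_text.splitlines():
        fields = line.split("\t")
        # Expected layout per line: STATUS <tab> module name <tab> file name
        if len(fields) >= 2 and fields[0] == "FAIL":
            failed.append(fields[1])
    return failed


# Illustrative summary content; the file name is made up.
example = (
    "PASS\tBasic Statistics\tsampleA_1.fastq.gz\n"
    "WARN\tPer base sequence content\tsampleA_1.fastq.gz\n"
    "FAIL\tAdapter Content\tsampleA_1.fastq.gz\n"
)

failures = failed_modules(example)
should_halt = len(failures) > 0  # a workflow could branch on this boolean
```

Returning the module names rather than a bare boolean keeps the reason for the halt available for logging or reporting.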

Next up, we’ll incorporate fastp, a bioinformatics tool designed for fast and flexible preprocessing of high-throughput sequencing data. It handles tasks such as quality control, adapter removal, and filtering of raw sequencing reads. It can need more memory than Flyte's default allocation; luckily we can use Resources directly in the task definition to bump that up and allow it to run efficiently.

Additionally, we can make use of a map task in our workflow to parallelize the processing of fastp across all our samples. Using a map task we can easily take a task designed for 1 input (although multiple are allowed via `functools.partial`) and 1 output, and pass it a list of inputs.

These get parallelized seamlessly across pods on the Flyte cluster, removing the need to manually manage concurrency.
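The `functools.partial` pattern mentioned above can be illustrated without a cluster. In this sketch the built-in `map` stands in for Flyte's map task, and `trim_reads` is a hypothetical placeholder that returns a label instead of running fastp. In a real workflow the partial would be handed to the map task rather than to `map`.

```python
from functools import partial
from typing import List


def trim_reads(sample: str, min_quality: int) -> str:
    """Stand-in for a per-sample task; returns a label instead of running fastp."""
    return f"{sample}.q{min_quality}.filtered"


# Bind the shared parameter so the callable takes a single per-sample input.
trim_q20 = partial(trim_reads, min_quality=20)

samples: List[str] = ["sampleA", "sampleB", "sampleC"]

# One call per sample. Locally this runs sequentially; a map task would
# fan the same calls out in parallel.
results = list(map(trim_q20, samples))
```

Binding the fixed arguments up front is what lets a multi-argument function fit the one-input, one-output shape that mapping expects.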

As a final check before moving on to the alignment, we can define an explicit approval right in the workflow using Flyte’s Human-in-the-Loop functionality. By aggregating reports of all processing done up to this point and visualizing them via Decks (more on that later), a researcher can quickly get a high-level view of the work done so far. Please see this more in-depth write-up on this functionality for details.

The workflow will pause execution and a modal in the console will prompt for approval before moving on to downstream processing. Subsequent steps could be anything from analyzing gene expression to classifying bacterial populations in a metagenomics study. In this case we’ll be moving on to variant calling to identify where a sample differs from the reference. This will provide us with salient locations to investigate dysfunction.

Finally, I’d like to call out that we’re using Flyte’s native entity-chaining mechanism via the `>>` operator.

This explicitly enforces the order of operations without having to rely on downstream tasks consuming upstream outputs, giving us even more flexibility in how execution proceeds.

Index generation & alignment

Alignment tools commonly require the generation of a tool-specific index from a reference genome. This index allows the alignment algorithm to efficiently query the reference for the highest quality alignment. Index generation can be a very compute-intensive step. Luckily, we can take advantage of Flyte's native caching when building that index. It’s of course possible to build that index offline and upload it to the object store beforehand, since it will seldom change. However, when rapidly prototyping different tools to include in a pipeline, taking advantage of this built-in functionality can be a huge speed boost. We've also defined a `cache_version` in the config that relies on a hash of the reference location in the object store. This means that changing the reference will invalidate the cache and trigger a rebuild, while still letting you switch back to your old reference and reuse its cached index. Here’s the ShellTask for Hisat2’s index:
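Setting the task definition itself aside, the cache-version idea can be sketched in plain Python. This is an illustration under assumptions: the helper name `cache_version_for`, the choice of SHA-256, the truncation length, and the reference paths are all hypothetical and not taken from the project's config.

```python
import hashlib


def cache_version_for(reference_uri: str, length: int = 12) -> str:
    """Derive a short, deterministic version string from a reference location."""
    digest = hashlib.sha256(reference_uri.encode("utf-8")).hexdigest()
    return digest[:length]


# Made-up object store locations for two different references.
v_old = cache_version_for("s3://my-bucket/refs/GRCh38.fa")
v_new = cache_version_for("s3://my-bucket/refs/T2T-CHM13.fa")

# Different locations give different versions, so cached results are not
# shared between references; the same location always gives the same version.
same_again = cache_version_for("s3://my-bucket/refs/GRCh38.fa")
```

Note that this hashes the location, not the file contents, so overwriting a reference in place at the same path would not change the version string.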

Finally, we arrive at the most important step: the alignment itself. It's usually a good idea to evaluate a few different tools to see how they perform with respect to runtime and resource requirements. This is easy with a dynamic workflow, which allows us to pass in an arbitrary number of inputs to be used with whatever tasks we want. Since their DAG is compiled at run time, we can nest dynamic workflows within static workflows, giving us a lot more flexibility in workflow definition. We’ll be comparing two popular aligners with fairly different approaches under the hood, bowtie2 and hisat2. In the main workflow you'll pass a list of filtered samples to each tool and be able to capture run statistics in the SamFile dataclass as well as visualize their runtimes in the Flyte console.

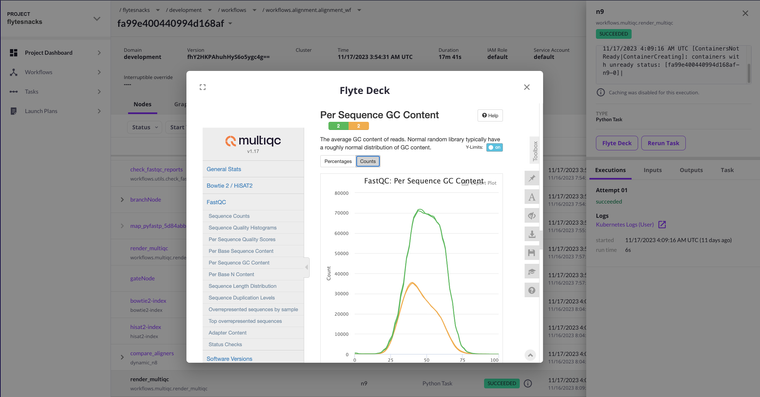
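As one example of a run statistic worth capturing, both aligners end their summary output with a line of the form `NN.NN% overall alignment rate`. The sketch below pulls that number out of captured log text. The function name and the sample log are illustrative, and how the project actually populates its SamFile dataclass is not shown here.

```python
import re
from typing import Optional

# Matches the closing line of the aligner summary, e.g. "96.70% overall alignment rate".
_RATE = re.compile(r"(\d+(?:\.\d+)?)% overall alignment rate")


def overall_alignment_rate(log_text: str) -> Optional[float]:
    """Return the overall alignment rate as a percentage, or None if absent."""
    match = _RATE.search(log_text)
    return float(match.group(1)) if match else None


# Illustrative log excerpt; the read counts are made up.
example_log = (
    "10000 reads; of these:\n"
    "  10000 (100.00%) were paired; of these:\n"
    "96.70% overall alignment rate\n"
)

rate = overall_alignment_rate(example_log)
```

Returning `None` rather than raising makes it easy to tell a missing summary apart from a genuine rate of zero.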

As a last step, we can generate a report similar to the one we used earlier in the approval step, now enhanced with alignment statistics from both aligners. To accomplish this we’re using MultiQC, an excellent report aggregation and visualization tool.

Although this was in play earlier, it’s worth mentioning that this dependency was added in-line and built on the fly via ImageSpec. This allows you to build or enhance existing Docker images without ever having to touch a Dockerfile. Implementation is as easy as:

After gathering all relevant metrics from the workflow, we're able to render that report via Decks, giving us rich run statistics without ever leaving the Flyte console!

Summing up

Flyte offers a number of excellent utilities to carry out bioinformatics analyses. You can bring your own tried-and-true tools and quickly compare them without having to shoehorn them into a new format. With an abstracted object store you can forget about messy path handling and manage all your datasets with rich metadata in a single, declarative place. As you move to production, Flyte has a plethora of options for resource management, parallelization, and caching to get the most bang for your buck regarding time and compute. With versioning across the board and strongly typed interfaces between tasks, you can also unburden yourself from time-wasting errors and awkward code revisions. Finally, the Flyte console has a number of powerful conveniences that let you view and interact with your workflow in unprecedented ways. All of these things together make for straightforward, robust research, unlocking what really matters: actionable insights, sooner.